Why AI Fails in Operations—and How to Deploy It Where It Actually Works

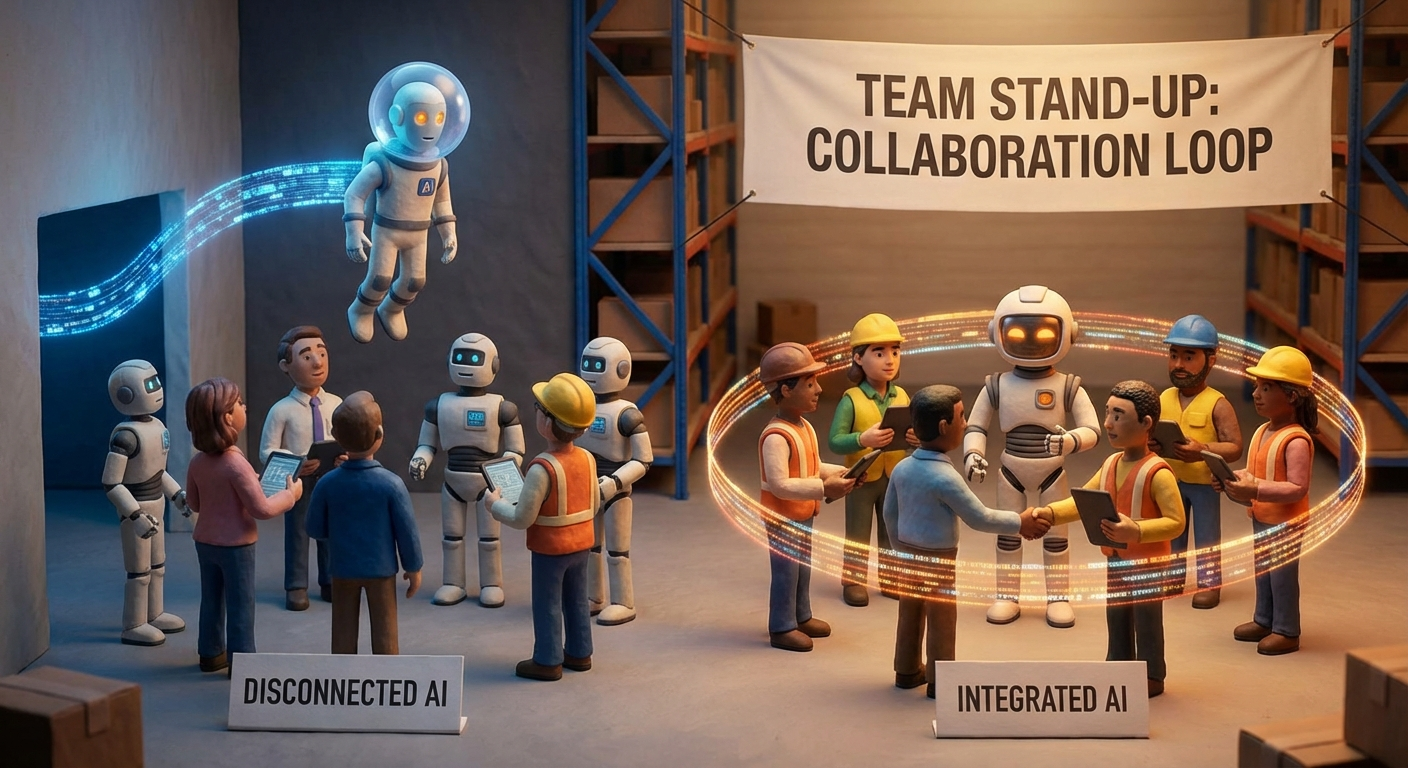

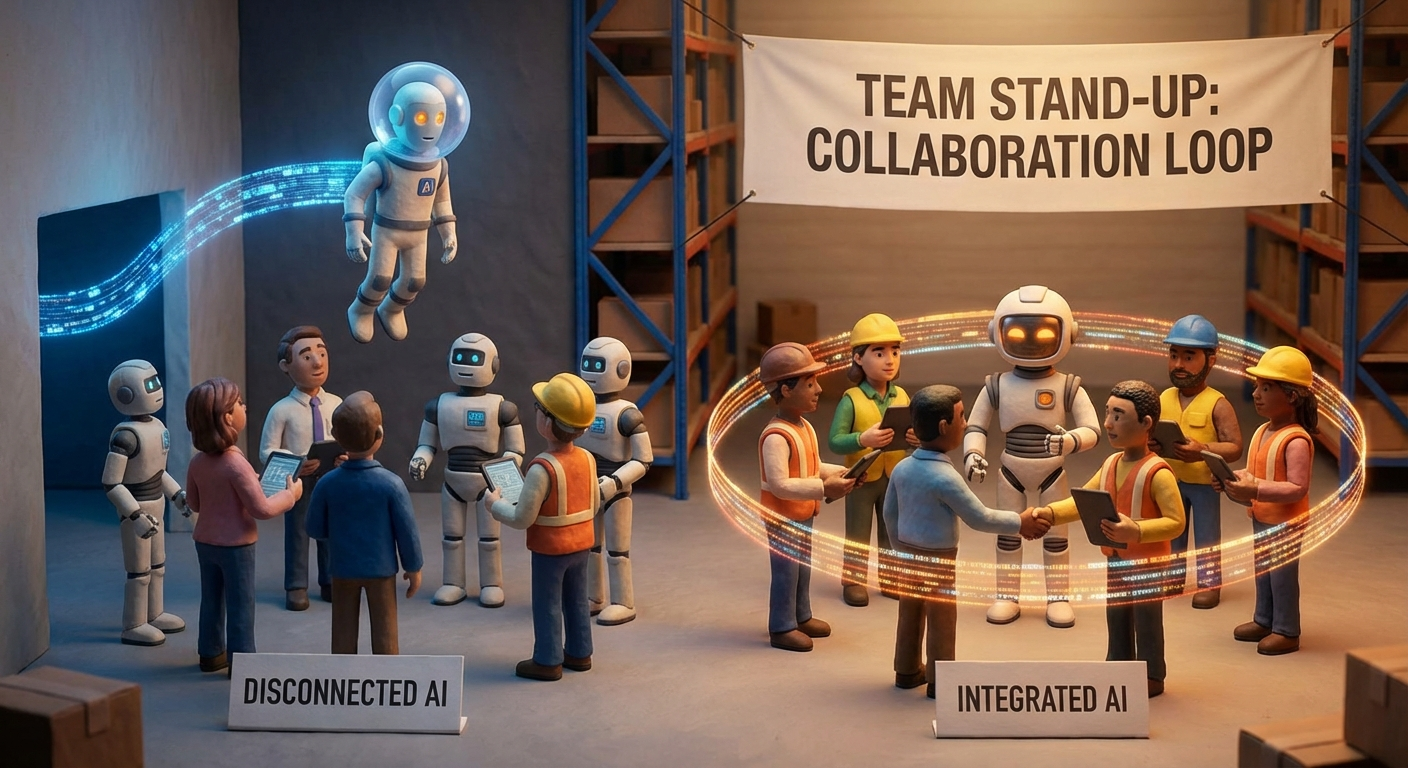

A contrarian take on why generic AI pilots fail in operations and where AI actually delivers value: embedded in daily decision loops, reducing cognitive load, and respecting operational constraints.

Introduction

AI is everywhere in headlines, sales decks, and boardroom conversations. Yet, when it comes to operations, too many AI pilots fizzle out, leaving leaders frustrated and skeptical. The promise meets the messy reality of complex, fast-moving environments where systems and people interact in ways no model can fully capture.

I’ve spent years scaling operations across logistics, supply chain, and eCommerce, and I’ve seen a clear pattern: AI rarely fails because of technology alone. It fails because it’s applied in the wrong places and without the operational rigor these environments demand. Generic pilots that try to “solve everything” often miss the mark, creating isolated pockets of automation that never scale or integrate into real decision-making.

The real value of AI lies elsewhere — in tightly scoped, data-rich pockets where it can ease the cognitive load on frontline teams, respect the constraints of operational systems, and embed within daily decision loops. In this article, I’ll explain why AI struggles in operations, where it actually delivers value, and how to build systems that scale it successfully. No hype — just practical insights from the floor.

Why AI Fails in Operations: Common Patterns

Misaligned use cases

Too many AI pilots chase broad “transformation” or generic automation without connecting to a high-importance, high-frequency decision. If the team can’t clarify which decision the model informs, who makes it, when, and what success looks like, the pilot is off course. Research from HBR and McKinsey consistently points to poorly scoped AI projects and fuzzy problem definitions as leading failure factors (see HBR: What Makes a Company Successful at Using AI; McKinsey: Bold accelerators…).

Fragile integration

Operations run on a mix of OT and IT systems — WMS, TMS, MES, ERP, quality systems, plus multiple point solutions. AI that lives outside these systems adds clicks, duplicates data entry, or causes delays. Operators will bypass it. Models need reliable data pipelines in, clear interfaces out, and latency fitting the decision’s cycle time. If insights arrive after the 7:30 production meeting, they simply don’t exist.

Weak cross-functional ownership

Data scientists can build solid models. Engineers can stand up infrastructure. Operations know the constraints. But when no one owns the full end-to-end outcome, models die during handoff. Without joint accountability among ops leaders, engineers, and data scientists, productionizing stalls and blame games emerge. HBR’s research highlights this ownership gap repeatedly as a barrier to AI adoption.

Insufficient human-in-the-loop controls

Operational context (supplier behavior, regulations, equipment quirks, night-shift realities) is often absent from the data. AI that proposes actions without transparent reasoning, or without straightforward ways for humans to inspect, adjust, or override its recommendations, either gets ignored or erodes trust. Successful systems let people see why a recommendation was made, correct it easily, and feed corrections back into the model.
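To make the inspect, adjust, and override loop concrete, here is a minimal Python sketch. It is an illustration under assumptions, not a description of any particular product: the class names (`Recommendation`, `Review`, `FeedbackStore`) and the example decision key are hypothetical. It shows a recommendation that carries its reasons, a review step that records what the human actually decided, and a way to pull adjustments back out as training corrections.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Recommendation:
    decision: str        # hypothetical key, e.g. "pickers_zone_A_shift_1"
    suggested: float     # value the model proposes
    reasons: List[str]   # plain-language drivers shown to the operator


@dataclass
class Review:
    recommendation: Recommendation
    action: str                   # "accept", "adjust", or "reject"
    final_value: Optional[float]  # what the human actually decided
    note: str = ""                # why the human changed or rejected it


@dataclass
class FeedbackStore:
    reviews: List[Review] = field(default_factory=list)

    def record(self, rec: Recommendation, action: str,
               final_value: Optional[float] = None, note: str = "") -> Review:
        if action not in {"accept", "adjust", "reject"}:
            raise ValueError(f"unknown action: {action}")
        if action == "accept":
            final_value = rec.suggested  # accepting keeps the model's value
        if action == "adjust" and final_value is None:
            raise ValueError("an adjustment needs a final value")
        review = Review(rec, action, final_value, note)
        self.reviews.append(review)
        return review

    def training_corrections(self) -> List[Tuple[str, float, float]]:
        """Adjusted decisions as (decision, suggested, final) for retraining."""
        return [
            (r.recommendation.decision, r.recommendation.suggested, r.final_value)
            for r in self.reviews
            if r.action == "adjust"
        ]


store = FeedbackStore()
rec = Recommendation("pickers_zone_A_shift_1", 12,
                     ["inbound volume above last week", "two large orders due"])
store.record(rec, "adjust", final_value=14, note="new hires still in training")
```

The design choice worth noting is that the override note is captured alongside the number. The number tells the model it was wrong; the note tells the team why, which is often context the data never contained.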

Result: pilots don’t embed

This pattern is predictable. The pilot runs in isolation. A few champions use it. The broader team sticks to old routines because they’re faster, safer, or tied to existing KPIs. The pilot never reaches full production, or it does but goes unused. Leaders conclude AI is overhyped. The truth is simpler: the system rejected a part that didn’t fit.

Insight: AI failure in operations rarely stems from the algorithm. It’s about alignment with the decision, workflow integration, and fitting the system’s real constraints.

Where AI Actually Delivers Value in Operations

Bounded, data-rich, repeatable processes

AI excels when the objective is clear, inputs are stable, and results are measurable. Examples include SKU-level demand forecasting, quality inspection from consistent image streams, and predictive maintenance based on reliable sensor data and known failure modes. These environments provide enough signal and a clear definition of “better.”

Embedded in daily decision loops

The real test: who uses the AI tool, when, and how does it improve or shorten an existing decision? For instance, a forecast helps only if it feeds labor planning by shift, slotting by zone, and replenishment within delivery windows. On production lines, anomaly detection matters if it triggers operator checks without halting everything. AI shouldn’t create new meetings or dashboards; it should feed existing ones.

Reduces cognitive load

Frontline teams juggle constraints, service levels, and exceptions constantly. AI that adds clicks or demands new inputs competes with urgent work. Instead, reducing choice complexity, flagging likely exceptions, and proposing sensible defaults saves time and improves outcomes. That is the standard to meet.

Respects operational constraints

Systems have hard bounds: cycle times, downtime, labor laws, carrier cutoffs, compliance, safety. AI recommendations outside these constraints generate waste and risk. Models trained and constrained within feasible regions produce actionable guidance. This is not a limitation; it is essential for adoption.
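One simple way to keep recommendations inside the feasible region is to filter candidates against hard constraints before optimizing anything. The sketch below is a hedged illustration: `PickPlan`, its fields, and the two constraints (a carrier cutoff and a labor-hour cap) are assumptions chosen for the example, and real systems typically have many more constraints.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class PickPlan:
    name: str
    start: datetime
    duration_min: int   # estimated minutes to complete the batch
    travel_m: float     # estimated walking distance, the quantity to minimize
    labor_hours: float  # labor the plan would consume


def is_feasible(plan: PickPlan, cutoff: datetime, max_labor_hours: float) -> bool:
    """A plan is feasible only if it finishes by the cutoff and fits the labor cap."""
    finish = plan.start + timedelta(minutes=plan.duration_min)
    return finish <= cutoff and plan.labor_hours <= max_labor_hours


def best_feasible_plan(plans: List[PickPlan], cutoff: datetime,
                       max_labor_hours: float) -> Optional[PickPlan]:
    """Optimize travel only among plans that respect the hard constraints."""
    candidates = [p for p in plans if is_feasible(p, cutoff, max_labor_hours)]
    if not candidates:
        return None  # escalate to a human instead of suggesting an infeasible plan
    return min(candidates, key=lambda p: p.travel_m)


start = datetime(2024, 1, 8, 13, 0)
cutoff = datetime(2024, 1, 8, 16, 0)  # hypothetical carrier cutoff
plans = [
    PickPlan("big_batch", start, 200, travel_m=900.0, labor_hours=10.0),
    PickPlan("two_waves", start, 170, travel_m=1100.0, labor_hours=11.0),
    PickPlan("rush", start, 120, travel_m=1500.0, labor_hours=20.0),
]
choice = best_feasible_plan(plans, cutoff, max_labor_hours=12.0)
```

Here the plan with the least travel misses the cutoff and the fastest plan exceeds the labor cap, so the function returns the middle option. An unconstrained optimizer would have picked the plan that ships late.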

Robust pipelines and MLOps

Operational environments change: new products, seasonality, suppliers, process tweaks. Without monitoring, drift detection, and retraining, models degrade and users lose trust. MLOps, covering data quality checks, versioning, rollback procedures, and incident ownership, is a prerequisite for sustainable AI in operations.
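Monitoring does not have to start sophisticated. As a rough sketch, the check below compares recent forecast error against a baseline and raises a flag when the error grows past a tolerance. This is an error-based proxy for drift, not a formal statistical drift test, and the function names and the `tolerance` value of 1.5 are assumptions for illustration.

```python
from statistics import mean
from typing import Sequence, Tuple


def mean_abs_error(actuals: Sequence[float], forecasts: Sequence[float]) -> float:
    """Average absolute gap between what happened and what was forecast."""
    if len(actuals) != len(forecasts) or not actuals:
        raise ValueError("actuals and forecasts must be non-empty and equal length")
    return mean(abs(a - f) for a, f in zip(actuals, forecasts))


def drift_alert(baseline_mae: float, recent_actuals: Sequence[float],
                recent_forecasts: Sequence[float],
                tolerance: float = 1.5) -> Tuple[bool, float]:
    """Flag when recent error exceeds the baseline error by the tolerance factor."""
    recent_mae = mean_abs_error(recent_actuals, recent_forecasts)
    return recent_mae > tolerance * baseline_mae, recent_mae


# Hypothetical numbers: baseline error of 10 units, then a week that went worse.
alert, recent = drift_alert(
    baseline_mae=10,
    recent_actuals=[100, 120, 80],
    recent_forecasts=[90, 100, 110],
)
```

In this example the recent errors are 10, 20, and 30 units, which average to 20 and exceed the threshold of 15, so the alert fires. What happens next (retrain, roll back, or page an owner) is the incident-ownership question, and it matters more than the formula.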

Human-in-the-loop governance

The most successful systems combine operations expertise, engineering reliability, data science tuning, and executive oversight. Auditability, meaning the ability to explain why the system made a recommendation, is a requirement, not a compliance afterthought. This transparency builds trust and accelerates adoption. HBR and MIT Sloan underscore the importance of cross-functional governance.

Insight: AI success depends on tight systems integration, repeatable decisions, and people who own outcomes. It’s not magic but disciplined engineering aligned with operational realities.

Building Systems That Scale AI in Operations

Cross-functional teams

Form a standing team with clear ownership: an operations leader empowered to make changes, a process engineer embedded in the workflow, a data scientist fluent in decision boundaries, and a software/infra engineer building pipelines. Their explicit goal: move one operational decision from manual to AI-assisted with measurable impact.

Center of Excellence (CoE)

With early wins, establish a CoE to codify AI practices — data standards, deployment patterns, monitoring, change controls, security, and privacy. The CoE serves as an enabler, accelerating new use cases and preventing reinvention of foundational work.

Clear metrics and incentives

Define and monitor KPIs directly linked to operational outcomes. For example, measure forecasting error at the granularity that impacts decisions, track false positives and negatives in inspection to minimize line slowdowns and defects, or monitor downtime and repair times in maintenance. Critically, align incentives so operators and leaders benefit when AI improves outcomes.
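As a small illustration of measuring at decision granularity, the sketch below computes forecast error per group (for example per zone or shift) using weighted absolute percentage error (WAPE), plus false positive and false negative rates for an inspection step. WAPE is one reasonable choice rather than a prescribed metric, and the function names and sample figures are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def wape_by_group(rows: Iterable[Tuple[str, float, float]]) -> Dict[str, float]:
    """rows are (group, actual, forecast); returns sum|error| / sum|actual| per group."""
    abs_err: Dict[str, float] = defaultdict(float)
    total: Dict[str, float] = defaultdict(float)
    for group, actual, forecast in rows:
        abs_err[group] += abs(actual - forecast)
        total[group] += abs(actual)
    # Groups with zero total volume are skipped to avoid dividing by zero.
    return {g: abs_err[g] / total[g] for g in total if total[g] > 0}


def inspection_rates(results: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """results are (predicted_defect, actual_defect) pairs."""
    false_pos = false_neg = good = bad = 0
    for predicted, actual in results:
        if actual:
            bad += 1
            false_neg += 0 if predicted else 1  # missed a real defect
        else:
            good += 1
            false_pos += 1 if predicted else 0  # flagged a good unit
    return {
        "false_positive_rate": false_pos / good if good else 0.0,
        "false_negative_rate": false_neg / bad if bad else 0.0,
    }


forecast_error = wape_by_group([
    ("zone_A", 100, 90),
    ("zone_A", 100, 120),
    ("zone_B", 50, 50),
])
rates = inspection_rates([(True, False), (False, False), (False, True), (True, True)])
```

Reporting error per zone rather than as one site-wide number is the point: an aggregate can look fine while the zone that drives overtime is consistently wrong.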

Change management

Adopt AI like a product rollout: train users, shadow decision points, collect feedback, and iterate. Highlight where AI saved time or prevented errors. Ensure it’s safe and straightforward to override AI recommendations. Use "gray mode" periods where AI suggests but humans decide to build trust before making AI the default.
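One way to decide when a gray-mode period has done its job is to track how often the human decision matched the AI suggestion. The sketch below is an assumption-laden illustration: the function names, the minimum sample size of 50, and the 90% agreement bar are placeholders that each team would set for its own risk posture.

```python
from typing import Iterable, List, Tuple


def agreement_rate(pairs: Iterable[Tuple[float, float]],
                   tolerance: float = 0.0) -> float:
    """Share of decisions where the human choice was within tolerance of the AI's."""
    items: List[Tuple[float, float]] = list(pairs)
    if not items:
        return 0.0
    agreed = sum(1 for ai, human in items if abs(ai - human) <= tolerance)
    return agreed / len(items)


def ready_for_default(pairs: Iterable[Tuple[float, float]],
                      min_decisions: int = 50,
                      min_agreement: float = 0.9,
                      tolerance: float = 0.0) -> bool:
    """Promote only with enough observed decisions and high enough agreement."""
    items = list(pairs)
    return (len(items) >= min_decisions
            and agreement_rate(items, tolerance) >= min_agreement)


# Hypothetical gray-mode log: (AI suggestion, human decision) head counts.
gray_log = [(10, 10)] * 9 + [(10, 13)]
rate = agreement_rate(gray_log)
```

Agreement alone is not proof the AI is right, since humans and the model can share the same blind spot. It is best read alongside the outcome KPIs above, as a signal of trust rather than accuracy.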

Governance and auditability

Maintain thorough documentation of data sources, model versions, and decision logs. Set policies for automated actions versus manual approvals that suit the organization’s risk posture. Make it easy to answer audit questions such as “Why did we do X?” after the fact.
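A decision log can be as simple as one structured record per decision. The following sketch assumes an in-memory list of JSON lines purely for illustration; a real deployment would write to durable storage, and the field names and identifiers here are hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def log_decision(log: List[str], decision_id: str, model_version: str,
                 inputs: Dict[str, Any], recommendation: Any,
                 final_action: Any, decided_by: str) -> Dict[str, Any]:
    """Append one JSON line capturing what was known, suggested, and done."""
    entry = {
        "decision_id": decision_id,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "recommendation": recommendation,
        "final_action": final_action,
        "decided_by": decided_by,
    }
    log.append(json.dumps(entry, sort_keys=True))
    return entry


def explain(log: List[str], decision_id: str) -> List[Dict[str, Any]]:
    """Return every logged record for a decision, oldest first."""
    return [e for e in map(json.loads, log) if e["decision_id"] == decision_id]


audit_log: List[str] = []
log_decision(
    audit_log,
    decision_id="2024-01-08-shift1-zoneA",
    model_version="labor-forecast-v3",
    inputs={"forecast_units": 4200, "open_orders": 310},
    recommendation={"pickers": 12},
    final_action={"pickers": 14},
    decided_by="shift_supervisor",
)
history = explain(audit_log, "2024-01-08-shift1-zoneA")
```

Recording the model version and the inputs next to the final action is what lets someone reconstruct the decision months later, even after the model has been retrained.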

Realistic scaling

Scale only after proving the solution under real load and variability. Expand incrementally to similar sites or processes instead of broad rollouts. Success depends on repeatability and integration, not on flashy presentations.

Insight: Scaling AI in operations is a systems challenge. Build foundational components once, reuse broadly, and expand at a pace reality allows.

Practical Steps to Deploy AI Where It Actually Works

- Use a narrow lens: Identify a specific, high-value decision loop that is data-rich, predictable, and tied to business outcomes — e.g., replenishment timing for certain SKUs, QC pass/fail on a specific line, or maintenance triggers for critical assets.

- Assemble an accountable team: Include an operations lead, process engineer, data scientist, and software/infra lead with authority over process changes.

- Integrate models into existing workflows: Connect with systems operators already use (WMS, MES, ERP). Present recommendations at the point of decision — during shift meetings or on operator screens.

- Design human-in-the-loop controls from the start: Provide clear recommendations, confidence scores, and one-click overrides. Use overrides as feedback for model training.

- Measure what matters: Track KPIs linked to operational goals such as service level, cost per unit, throughput, downtime, and right-first-time delivery. Also measure time saved and error reduction.

- Invest in MLOps early: Implement data quality checks, model monitoring, drift alerts, and retraining pipelines. Version control and rollback procedures are essential.

- Manage change like a product: Train users, shadow workflows, publish wins, and build trust before setting AI as the default.

- Scale only after stability: Replicate to comparable areas once the model maintains performance under variability. Use the CoE to standardize schemas, deployment, and governance.

These steps align with best practices from MIT Sloan and HBR: start with a real, bounded decision; build cross-functional ownership; integrate tightly into workflows; govern deliberately; and scale methodically.

A Brief Example from the Floor

At a mid-market logistics provider, the challenge was volatile labor planning and replenishment. Rather than “do AI for everything,” we focused on scheduling — how many people to assign by zone over the next three shifts. We trained a forecast on SKU arrivals and order mix, but the real win was where we put it: inside the 7:30 a.m. huddle, printed on a simple page with three clear actions. Supervisors could accept, adjust, or reject with reasons. We measured schedule adherence and overtime impact. Overrides fed back into model training, and improvements accumulated weekly. The team didn’t feel replaced — they felt supported. This is the pattern to watch.

Respecting Constraints Changes the Outcome

The difference between a toy and a tool is whether the system respects constraints. In fulfillment, carrier cutoff times are hard walls. In food logistics, temperature controls set boundaries. In healthcare, compliance is non-negotiable. AI that “optimizes” beyond these boundaries creates costly rework and risk. AI that works within them unlocks practical options: earlier picks for late orders, smarter slotting for heavy items, better batching that reduces travel without missed cutoffs. Constraints are not nuisances; they define the feasible solution space.

What This Looks Like in Legacy Environments

Legacy operations are layered systems shaped by decades of history. At All Points, a 30-year-old logistics company I’m helping modernize, success isn’t about chasing the newest model. It’s about clean interfaces, consistent data definitions, and placing recommendations exactly where planners already decide. Novelty doesn’t earn points — fewer expedites, smoother shifts, and better service do. AI helps when it is a component embedded in that broader system, not the narrative.

The Role of Leadership

Executives often ask, “Should we be doing more with AI?” The better question is, “Which decision, if improved by 10–20%, meaningfully impacts cost, service, or risk — and do we have the data and workflows to support it?” Leadership’s job is to pick that decision, set clear KPIs, assign ownership, and create space for iteration. It’s also to say no to pilots unlikely to survive first contact with the floor.

Common Pitfalls to Avoid

- Deploying black-box AI without operator visibility or override capability

- Creating parallel workflows instead of integrating with existing ones

- Treating MLOps as an afterthought rather than foundational

- Tracking technical metrics (AUC, MAPE) without linking to business outcomes

- Scaling before the model proves stable under live variability

- Failing to align incentives — forcing adoption when tools slow measured throughput

Conclusion

AI’s misses in operations aren’t technology failures. They are failures of fit. Pilots fail when they chase generic promises, sit outside workflows, and ignore the constraints and incentives that shape daily work. AI succeeds when it is embedded in specific decision loops, reduces cognitive load, and respects system limits. This looks like better forecasts at actionable granularity, inspections where false alarms are controlled, and maintenance triggers that operators trust.

Looking ahead, integration will improve, ownership will clarify, and data, deployment, and governance practices will standardize. But operations remain complex, with human judgment under constraints as the core reality. AI will accelerate and refine those judgments where it fits — but won’t replace systems thinking or leadership.

If you want AI to work in operations, start small, focus on a real decision, and build the system around it. Then do it again. That’s how you scale.

Disclaimer: This article is for informational purposes only and reflects the author's professional experience and insights. It does not constitute professional advice. Readers should evaluate AI tools and strategies in their own operational contexts and consult appropriate experts before making implementation decisions.
